Hawi - Phase 2

Posted 03/29/2010 by Emil Valkov

Having been entirely remote controlled until now, Hawi makes its first steps (or rather wheel turns) on its own. I made a lot of software upgrades to achieve this. Some of them aren't necessary right now, but will hopefully pay off in the future.

The Hawi hardware is essentially the same as described in my previous article.

I only added an ultrasound pinger, which I'm not even using yet.

All the interesting upgrades are in the software.

Here's a video of the new version in action.

The setup

Hawi itself is a holonomic drive platform composed of 4 wheels that can be oriented independently.

It's controlled by 3 Arduino Duemilanoves, a Phidgets servo controller, and a cheap Asus Eee PC netbook. More details can be found here if needed.
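Because each of Hawi's 4 wheels can be steered independently, moving in a given direction comes down to computing one angle and one speed per wheel. The sketch below shows the standard kinematics for such a platform; it is not the author's code, and the wheel positions in `WHEEL_POSITIONS` are hypothetical, since the post doesn't give Hawi's dimensions.

```python
import math
from typing import List, Tuple

# Hypothetical wheel layout: (x, y) of each wheel pivot relative to the
# robot centre, in metres. Hawi's real dimensions are not given in the post.
WHEEL_POSITIONS: List[Tuple[float, float]] = [
    (0.15, 0.15),    # front-left
    (0.15, -0.15),   # front-right
    (-0.15, 0.15),   # rear-left
    (-0.15, -0.15),  # rear-right
]


def wheel_commands(vx: float, vy: float, omega: float,
                   positions: List[Tuple[float, float]] = WHEEL_POSITIONS
                   ) -> List[Tuple[float, float]]:
    """Return (angle_rad, speed) for each independently steered wheel.

    vx, vy: desired body velocity in the robot frame (x forward, y left).
    omega:  desired rotation rate in rad/s (counter-clockwise positive).
    """
    commands = []
    for px, py in positions:
        # Velocity of the wheel contact point = body velocity + omega x r
        wx = vx - omega * py
        wy = vy + omega * px
        angle = math.atan2(wy, wx)
        speed = math.hypot(wx, wy)
        commands.append((angle, speed))
    return commands
```

For a pure sideways move, `wheel_commands(0.0, 0.5, 0.0)` turns every wheel to 90° at the same speed, which is exactly what makes the platform holonomic: it can translate in any direction without first rotating its body.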

Hawi itself doesn't have any sensors yet. The ultrasound pinger doesn't count, since it isn't used yet.

Instead, I'm using a second, fixed laptop connected to 2 webcams that look at Hawi, as shown below:

The software running on the fixed laptop uses OpenCV and the cvblob library, similarly to my robot arm.

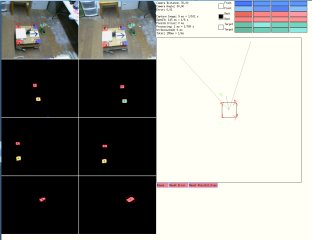

Here is a screenshot of the running application, showing the images captured by both cameras.

At the top, you can see the colors used to match the blobs. These colors are entered manually for now, and are fed into the cvblob library to extract the coordinates of the red, blue and green squares.
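Turning a manually chosen color into square coordinates is a two-step job: threshold the image around that color, then label the connected regions and take their centroids. cvblob is a C++ library, so rather than guess at a binding for it, the sketch below is a pure-Python stand-in for the same idea; the tolerance parameter `tol` and the 4-connectivity are assumptions, not values from the post. In OpenCV itself, `cv2.inRange` and `cv2.connectedComponentsWithStats` do the same work much faster.

```python
from collections import deque
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B)


def color_mask(image: List[List[Pixel]], target: Pixel, tol: int = 40) -> List[List[bool]]:
    """True where every channel is within `tol` of the manually entered color."""
    return [[all(abs(c - t) <= tol for c, t in zip(px, target)) for px in row]
            for row in image]


def blob_centroids(mask: List[List[bool]], min_area: int = 1) -> List[Tuple[float, float]]:
    """Label 4-connected regions of the mask and return their (x, y) centroids."""
    h = len(mask)
    w = len(mask[0]) if h else 0
    seen = [[False] * w for _ in range(h)]
    centroids = []
    for y0 in range(h):
        for x0 in range(w):
            if not mask[y0][x0] or seen[y0][x0]:
                continue
            # Breadth-first flood fill collects every pixel of this blob
            queue = deque([(x0, y0)])
            seen[y0][x0] = True
            sum_x = sum_y = count = 0
            while queue:
                x, y = queue.popleft()
                sum_x += x
                sum_y += y
                count += 1
                for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
                    if 0 <= nx < w and 0 <= ny < h and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            # Ignore blobs smaller than min_area pixels (e.g. sensor noise)
            if count >= min_area:
                centroids.append((sum_x / count, sum_y / count))
    return centroids
```

Running `blob_centroids(color_mask(frame, red))` once per color gives one list of image coordinates per colored square, per camera.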

Those coordinates are then matched between the left and right cameras. I can check that the matching is correct by looking at the overlaid numbers "0", "1", "2" and "3" on the top camera images.

0 is top-right, 1 is bottom-right, 2 is top-left and 3 is bottom-left.
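The post doesn't say how the left/right matching is implemented. One simple way to get consistent labels is to sort the four points of each image into the fixed order above, so that the point labeled "0" in the left image pairs with "0" in the right image. The sketch below assumes the four points form a roughly upright quadrilateral in both images (it can mislabel points if the shape is rotated by about 45°), and that image y grows downwards.

```python
from typing import List, Tuple

Point = Tuple[float, float]  # image coordinates, y grows downwards


def label_corners(points: List[Point]) -> List[Point]:
    """Order four points as 0 = top-right, 1 = bottom-right,
    2 = top-left, 3 = bottom-left."""
    if len(points) != 4:
        raise ValueError("expected exactly 4 points")
    by_x = sorted(points, key=lambda p: p[0])
    left, right = by_x[:2], by_x[2:]
    # Smaller y means higher up in the image
    top_right, bottom_right = sorted(right, key=lambda p: p[1])
    top_left, bottom_left = sorted(left, key=lambda p: p[1])
    return [top_right, bottom_right, top_left, bottom_left]


def match_stereo(left_pts: List[Point], right_pts: List[Point]) -> List[Tuple[Point, Point]]:
    """Pair up points carrying the same label in both camera images."""
    return list(zip(label_corners(left_pts), label_corners(right_pts)))
```

Drawing each label next to its point, as in the screenshot, is then a quick visual check that both cameras agree.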

Finally, you can see a top-view schematic of Hawi, including the target and the calculated wheel orientations that drive Hawi towards it.
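Before wheel orientations can be computed, the target has to be expressed relative to Hawi. A minimal sketch, assuming Hawi's position and heading in the top view are already known (the post doesn't say how they are derived from the colored squares), rotates the offset to the target into the robot frame and returns a velocity pointing at it. The parameters `max_speed`, `slow_radius` and `stop_radius` are hypothetical tuning values.

```python
import math
from typing import Tuple


def drive_towards(robot_xy: Tuple[float, float], heading: float,
                  target_xy: Tuple[float, float],
                  max_speed: float = 1.0, slow_radius: float = 0.3,
                  stop_radius: float = 0.02) -> Tuple[float, float]:
    """Return a robot-frame velocity (vx, vy) pointing at the target.

    heading is the robot's orientation in the world (top-view) frame, in radians.
    Speed ramps down linearly inside slow_radius and is zero inside stop_radius.
    """
    dx = target_xy[0] - robot_xy[0]
    dy = target_xy[1] - robot_xy[1]
    dist = math.hypot(dx, dy)
    if dist < stop_radius:
        return (0.0, 0.0)
    # Rotate the world-frame offset by -heading to express it in the robot frame
    c, s = math.cos(heading), math.sin(heading)
    rx = c * dx + s * dy
    ry = -s * dx + c * dy
    speed = max_speed * min(1.0, dist / slow_radius)
    return (speed * rx / dist, speed * ry / dist)
```

Because the platform is holonomic, the result is a pure translation: Hawi can head straight for the target without first turning its body, and `(vx, vy)` can be handed directly to a per-wheel angle/speed calculation.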

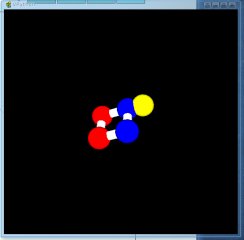

I can verify the triangulated 3D points by sending them to a small Visual Python (VPython) application I also wrote.

It shows a little 3D representation of Hawi and its target.

With it I can zoom, pan and rotate to see what the computer sees from any angle.
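The post doesn't describe how the 3D points are triangulated from the two webcams. For reference, the simplest case is a rectified, side-by-side stereo pair, where depth follows directly from the horizontal disparity: Z = f·B / (u_left − u_right). The sketch below assumes such a rectified setup with a known focal length `focal_px`, baseline `baseline` and principal point `(cx, cy)`; two webcams looking at Hawi from arbitrary angles would instead need full calibration, for which OpenCV provides `cv2.stereoRectify` and `cv2.triangulatePoints`.

```python
from typing import Tuple


def triangulate_rectified(left_px: Tuple[float, float], right_px: Tuple[float, float],
                          focal_px: float, baseline: float,
                          cx: float, cy: float) -> Tuple[float, float, float]:
    """Triangulate one point seen by a rectified, side-by-side stereo pair.

    left_px / right_px: (u, v) pixel coordinates of the same point in each image.
    focal_px: focal length in pixels; baseline: camera separation (e.g. metres).
    cx, cy: principal point in pixels. Returns (X, Y, Z) in the left camera frame.
    """
    disparity = left_px[0] - right_px[0]
    if disparity <= 0:
        raise ValueError("point must have positive disparity")
    z = focal_px * baseline / disparity
    # Back-project the left pixel through the pinhole model at depth z
    x = (left_px[0] - cx) * z / focal_px
    y = (left_px[1] - cy) * z / focal_px
    return (x, y, z)
```

For example, with a 500 px focal length and a 10 cm baseline, a 25 px disparity places the point 2 m from the cameras. Each matched pair of labeled points yields one 3D point like this, which is the kind of data a viewer such as the VPython application can display.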

Going Forward

This is just a start to prove that my software architecture works. All this is of course overkill just to move towards a target.

I didn't even need 3D / stereovision for this. A single camera above Hawi would have been enough, and much simpler. But now Hawi has room to grow.

Stability is one of the main areas that need improvement, just like with the first versions of my robot arm.

Better stability would let me increase Hawi's speed. Right now, I have to limit Hawi to about 1/5th of the speed its motors are capable of.

I tried it once at full speed and it just rammed into the RC car.

Why the name Hawi?

Just for kicks: I didn't name Hawi randomly. It's actually an acronym spelled backwards, standing for "It Worked At Home", which is pretty appropriate for a robot, don't you think?